Why would slow fades and color changes be needed?

LEDs are often used to form the building blocks of a lighting system, whether this be a building façade, a theme park, a digital sign, or many of the amazing lighting designs found in stage and theatre applications.

The requirements of these applications can be very demanding, and the LEDs need to be able to perform to very specific design requirements.

In many cases, slow and gradual lighting effects are desirable, rather than rapid movements. This means that fading to 0% from a given intensity level (or from 0% up to a given intensity), or changing from one color to a nearby color over an extended time (e.g. tens of seconds), is a critical requirement for the LEDs themselves and for the overall system.

This requirement is more prevalent in theatrical applications, where these fades and changes are used in slow moving scenes, or for transitions between cues. These subtle changes help the lighting designer to create their desired design and communicate an emotion or story.

This seemingly simple requirement brings its own challenges, particularly if the data format chosen and the pixel LEDs used are 8-bit. With 8-bit data, a slow fade or color change may not appear as intended, simply because the resolution isn’t high enough. It may even look as though the frame rate of the system is too low or that frames are being dropped, when the real issue is resolution.

What problems arise with long fade times in digital lighting?

A lighting system is made up of many components, with many specifications. One of these specifications is the color resolution that is used for DMX data.

A single DMX channel consists of 8 bits of information and is often used to represent the intensity of a single color within a single pixel. This gives 256 levels of intensity per channel. Using 16 bits per color, on the other hand, gives 65,536 levels.
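As a quick check of the numbers above, the following minimal sketch computes the number of levels and the smallest possible step for a few bit depths. The function name `intensity_levels` is only illustrative.

```python
def intensity_levels(bits: int) -> int:
    """Number of discrete intensity levels an unsigned value of `bits` bits can hold."""
    return 2 ** bits


for bits in (8, 12, 16):
    levels = intensity_levels(bits)
    # The smallest step is one level out of (levels - 1) intervals of full scale.
    smallest_step = 100 / (levels - 1)
    print(f"{bits}-bit: {levels} levels, smallest step = {smallest_step:.4f}% of full scale")
```

The smallest step is what limits how finely a slow fade can move, which is why the bit depth matters more as fade times get longer.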

When contemplating the resolution of dimming, the entire data chain from the lighting source to the LED needs to be considered.

The source of lighting data normally sends 8-bit data by default but may be able to support 16-bit data. In the context of a pixel system, this 8 or 16-bit data is received by a PixLite via DMX512, Art-Net or sACN. The PixLite will then use this data to produce an outgoing pixel data stream.

The pixels themselves also have a specification for color resolution, which is set by the specific pixel protocol. 8-bit and 16-bit pixels are the most common, but it could be something else like 12-bit.

Regardless, they will have a finite number of intensity levels that can be used for PWM dimming, based on the color resolution of the pixel protocol.

You can read more about PWM Dimming in the article: Refresh Rate vs PWM Rate.

The problem with driving 8-bit pixels from an 8-bit data source is that, when you perform a long fade or a slow color change, the limited number of intensity levels degrades the look of the effect. The fade appears “steppy”, and the colors seem to deviate from what you intended.

We’ll explore these issues in more detail below, and explain what solutions are available to allow you to build a pixel system that allows long fades to be used in your lighting design.

Quantization and Frame Rates

A resolution of 8 bits means only 256 intensity levels are available which, in the context of a long fade, isn’t many.

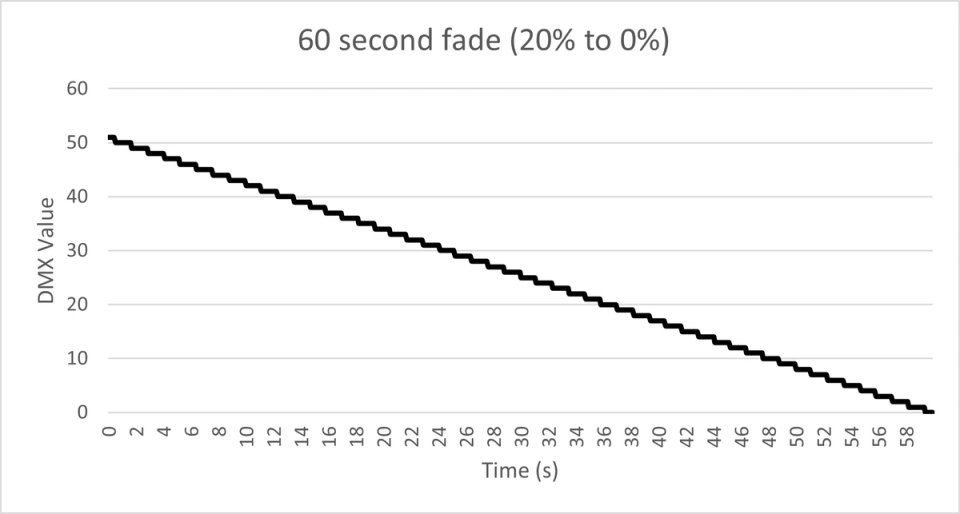

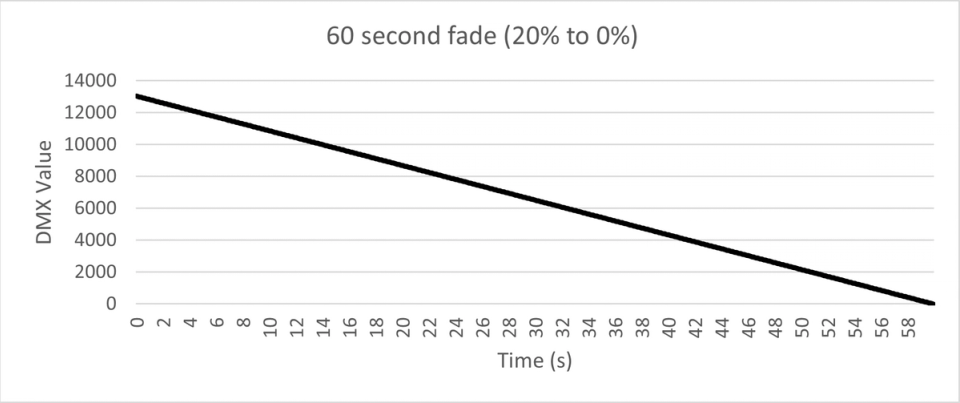

Imagine a subtle scene where the LEDs are only set to a dim 20% white, and this slowly fades down to 0% over 60 seconds during a scene change. In the world of DMX, this means that red, green, and blue are fading from DMX value 51 down to 0 over a duration of 60 seconds.

Because there are only 51 steps between DMX value 51 and 0, the data values effectively change only 0.85 times per second (51 steps over 60 seconds). Note that this is independent of your “frame rate”, which is the rate at which data frames are sent from the lighting software to the PixLite and from the PixLite to the pixel LEDs.

In other words, the LEDs will be changing slower than once per second, even if you have (for example) a 30 or 60 FPS source of data.

This effectively lowers the frame rate to less than one frame per second, which is very noticeable to the human eye.
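The arithmetic behind the 0.85 figure can be sketched as follows. The helper name `fade_step_rate` is hypothetical, and the conversion from percent to a data value by simple rounding is an assumption about how a lighting source might behave.

```python
def fade_step_rate(start_percent, end_percent, duration_s, bits=8):
    """Return (start_value, end_value, value changes per second) for a linear fade."""
    max_value = 2 ** bits - 1
    # Assumption: the source converts percent to a data value by rounding.
    start = round(start_percent / 100 * max_value)
    end = round(end_percent / 100 * max_value)
    steps = abs(start - end)
    return start, end, steps / duration_s


start, end, rate = fade_step_rate(20, 0, 60, bits=8)
print(f"8-bit: {start} -> {end}, {rate:.2f} changes per second")

start16, end16, rate16 = fade_step_rate(20, 0, 60, bits=16)
print(f"16-bit: {start16} -> {end16}, {rate16:.2f} changes per second")
```

For the same fade, the 16-bit case has far more value changes per second than a 30 or 60 FPS source can even send, so the frame rate rather than the resolution becomes the limit.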

Viewers often describe a fade like this as “steppy”, because the light noticeably steps down instead of fading smoothly. You can see this in the example graph shown below.

Long Fade of Dim White to Off

The key thing to understand is that the DMX levels are discrete, or in other words, specific whole number values.

For example, even if the user interface of your source of lighting data appears to be able to fade down from 20% to 19.9%, the actual DMX value being streamed will still be 51, simply because there are a finite number of intensity levels (256 in the case of 8-bit data).

So, as your system performs what you think would be a smooth fade, the actual DMX values will be stepping down in rounded intensity levels. This process is referred to as quantization.

In faster fades, this may be acceptable, as the steps may follow each other quickly enough not to be seen. For longer fades, the steps will likely be noticeable and the result not suitable for use.
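To illustrate quantization, here is a minimal simulation of the 60 second fade sent at 30 FPS. The function `simulate_fade` and the rounding behavior are assumptions for illustration, not a description of any particular lighting console.

```python
def simulate_fade(start_percent, end_percent, duration_s, fps=30, bits=8):
    """Return the quantized data value sent on every frame of a linear fade."""
    max_value = 2 ** bits - 1
    total_frames = int(duration_s * fps)
    values = []
    for frame in range(total_frames + 1):
        progress = frame / total_frames
        percent = start_percent + (end_percent - start_percent) * progress
        # Quantization: the ideal level is rounded to a whole data value.
        values.append(round(percent / 100 * max_value))
    return values


values = simulate_fade(20, 0, 60, fps=30, bits=8)
changes = sum(1 for a, b in zip(values, values[1:]) if a != b)
print(f"frames sent: {len(values)}")
print(f"frames where the value actually changed: {changes}")
print(f"average frames per value: {len(values) / (changes + 1):.1f}")
```

Although 1801 frames are sent, the value changes on only 51 of them, so each level is held for more than a second before the next visible step.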

The Problem Gets Worse - Human Perception at Low Light Levels

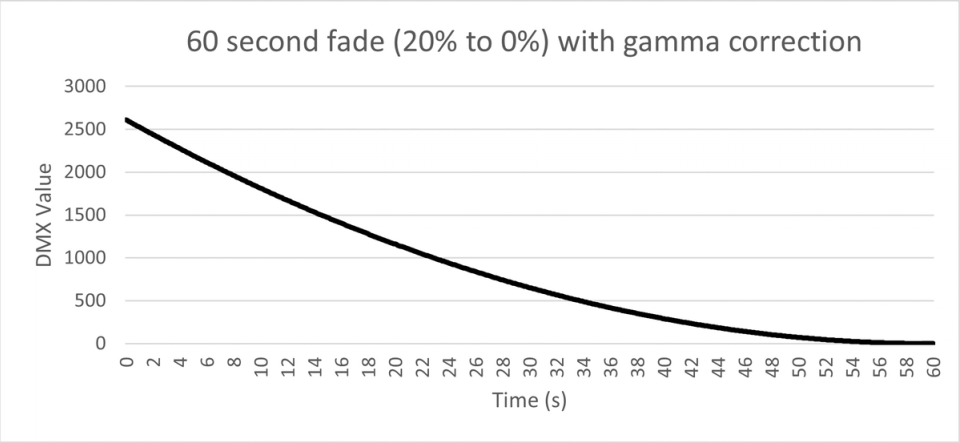

Another important consideration when working with LEDs is how we as humans perceive light. The human eye is more sensitive to changes at lower levels of light.

Because of this, a fade that is linear in DMX values won’t be perceived as linear by our eyes.

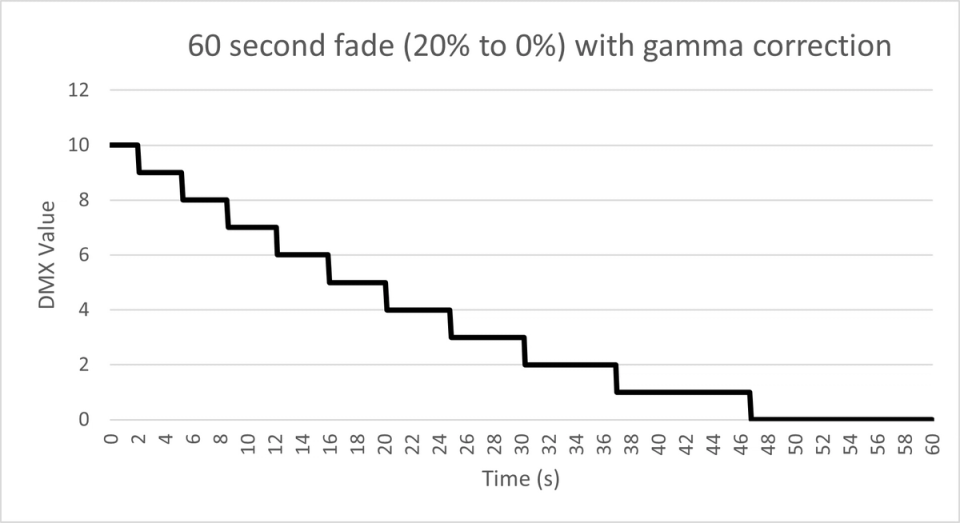

To help mitigate this issue, gamma correction can be implemented.

Gamma correction maps source data onto a non-linear (power-law) curve before it is displayed, one that represents the human eye’s perception of intensity more accurately.

This technique reduces the difference between lower intensity levels, and so makes colors appear to dim more smoothly and more accurately.

You can read more about gamma correction in the article: Dithering and Gamma Correction.

Gamma correction, although very useful in many settings, is difficult to implement effectively when working with 8-bit pixels and especially problematic if extended fade times are used.

This is, again, simply because the number of intensity levels that can be output to the pixels is limited (256 levels in the case of 8-bit pixels).

To help explain this, see the adjusted graph below, which now includes gamma correction. You can see the fade is even less smooth.

Long Fade of Gamma Corrected Dim White to Off
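The effect of gamma correction on an 8-bit output can be sketched with a simple power-law curve. The gamma value of 2.2 is an assumption chosen for illustration; the curve used by a real source or controller may differ.

```python
GAMMA = 2.2  # assumed value, for illustration only


def gamma_correct(value, gamma=GAMMA, in_bits=8, out_bits=8):
    """Map a linear input value onto a power-law curve and quantize the result."""
    in_max = 2 ** in_bits - 1
    out_max = 2 ** out_bits - 1
    return round((value / in_max) ** gamma * out_max)


# Input levels used by the 20% -> 0% fade: DMX 0..51.
linear_levels = list(range(52))
corrected = [gamma_correct(v) for v in linear_levels]
distinct = len(set(corrected))

print(f"input levels in the fade:  {len(linear_levels)}")
print(f"output levels after gamma: {distinct}")
print(f"highest output value:      {corrected[-1]}")
```

Under this assumption, the 52 input levels of the fade collapse onto only 8 output levels, so the gamma-corrected fade has even fewer visible steps to work with.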

Deviations from the Desired Color Mix

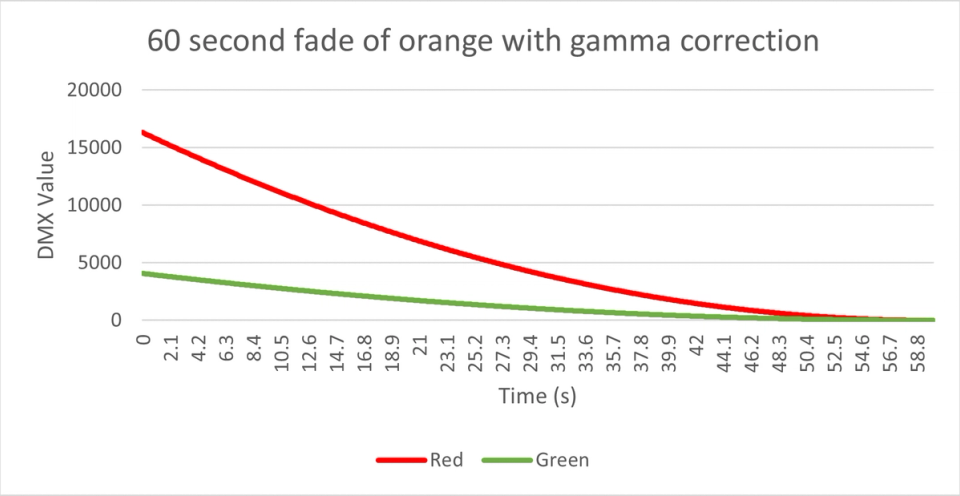

An even mix of red, green, and blue will produce what we perceive as white.

So, in our examples above where we are fading with white, we have only been considering what this looks like with an even mix of red, green, and blue.

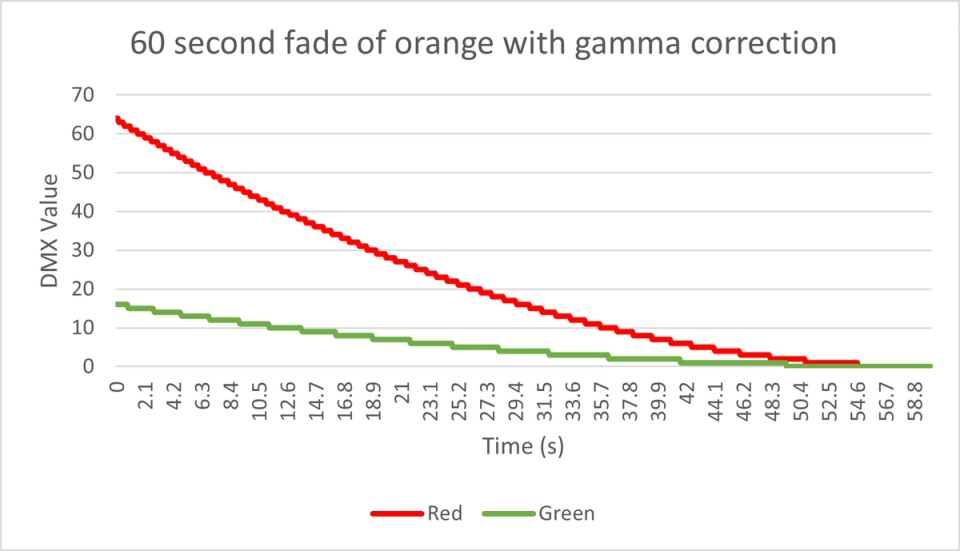

However, in the common situation where you are fading down another color mix, then the fade may actually change color over time.

For example, an ideal orange might be 100% red and 50% green. If we fade this orange down over 60 seconds with gamma correction applied, the result is a curve like the one below:

Long Fade of Gamma Corrected Dim Orange to Off

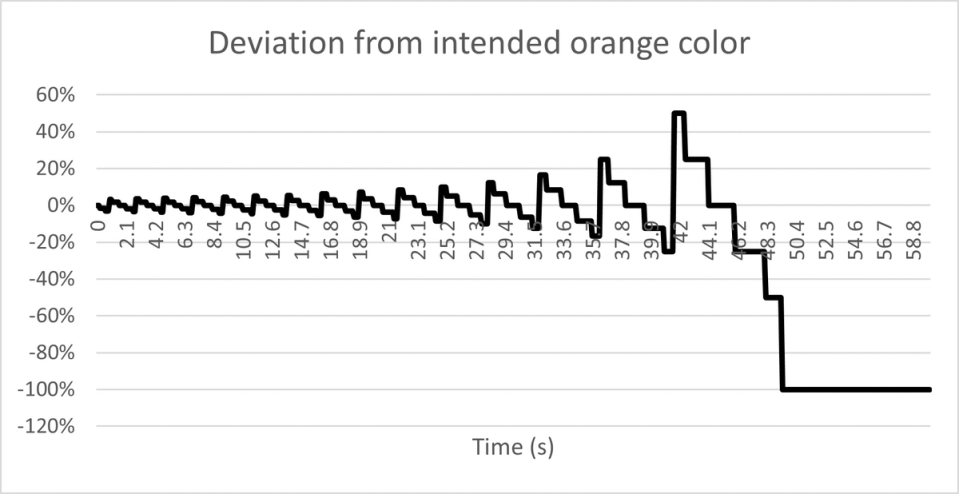

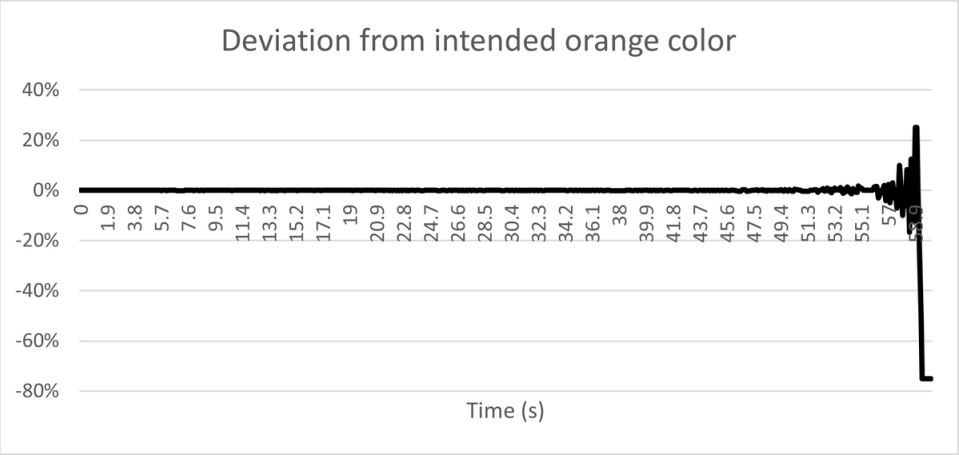

To help visualize the deviations from the ideal, intended orange, the following graph shows deviations as percentages.

Deviations from intended orange during 1 minute fade

Apart from the fact that both red and green will appear “steppy”, with very low effective frame rates, we can see that red and green are stepping down at different times.

This causes a deviation from what the intended color mix should be, because the ratio of red and green is always changing and never what the lighting designer intended.

The most evident deviation in our example starts at the 49 second mark, where green needs to fade down to such a low level that quantization forces it to DMX value 0. From this point, between 49 seconds and 54 seconds, the only active color is red. During what was intended to be a long fade of orange, the actual color of the LEDs has deviated to pure red for 5 seconds.

This is a problem.

In addition, the red LED will turn off earlier than intended, at the 54 second mark. So, for the last 6 seconds of the intended fade, the pixels will be off.

As you can see, 8-bit pixels are not the ideal choice where extended fades and slow changes to color are required.
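The following sketch simulates the orange fade with 8-bit data to show red and green reaching zero at different times. It assumes a gamma of 2.2, simple rounding, and a 30 FPS source, none of which are stated in this article, so the exact times it prints will not necessarily match the graphs above.

```python
GAMMA = 2.2  # assumed value, for illustration only


def to_dmx(percent):
    """Quantize a percentage to an 8-bit DMX value."""
    return round(percent / 100 * 255)


def gamma8(value):
    """Apply a power-law gamma curve and quantize back to 8 bits."""
    return round((value / 255) ** GAMMA * 255)


def orange_fade(duration_s=60, fps=30):
    """Return (time_s, red, green) per frame for 100% red / 50% green fading to off."""
    total = duration_s * fps
    frames = []
    for frame in range(total + 1):
        remaining = 1 - frame / total
        red = gamma8(to_dmx(100 * remaining))
        green = gamma8(to_dmx(50 * remaining))
        frames.append((frame / fps, red, green))
    return frames


def first_time_at_zero(frames, channel_index):
    """Time of the first frame where the given channel (1=red, 2=green) is 0."""
    for frame in frames:
        if frame[channel_index] == 0:
            return frame[0]
    return None


frames = orange_fade()
green_off = first_time_at_zero(frames, 2)
red_off = first_time_at_zero(frames, 1)
print(f"green reaches 0 at {green_off:.1f} s")
print(f"red reaches 0 at   {red_off:.1f} s")
print(f"pure red for {red_off - green_off:.1f} s, fully off for {60 - red_off:.1f} s")
```

Whatever the exact curve, the pattern is the same: green drops out first, leaving a stretch of pure red, and then red drops out before the fade is meant to end.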

The Solution - 16-Bit Everything

Using a 16-bit source of lighting data and pairing this with 16-bit pixels means more intensity values are available for the lighting source to dim and apply gamma correction.

A higher resolution reduces “steppiness” and helps to keep the intended color mix intact throughout the fade.

In our first example of a 60 second fade from 20% white to 0%, you can now see the curve is much smoother, as there are more DMX values available to be used.

60 second fade 20% to 0%
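To put numbers on the improvement, this sketch counts how many distinct output levels are available during the 20% to 0% fade at each resolution, with and without gamma correction. The gamma of 2.2 is again an assumption for illustration.

```python
def distinct_levels_in_fade(start_percent, bits, gamma=1.0):
    """Count distinct output values between 0 and start_percent at the given bit depth."""
    max_value = 2 ** bits - 1
    start = round(start_percent / 100 * max_value)
    outputs = {
        round((value / max_value) ** gamma * max_value)
        for value in range(start + 1)
    }
    return len(outputs)


for bits in (8, 16):
    plain = distinct_levels_in_fade(20, bits)
    corrected = distinct_levels_in_fade(20, bits, gamma=2.2)  # assumed gamma
    print(f"{bits}-bit: {plain} levels linear, {corrected} levels with gamma correction")
```

A 60 second fade at 30 FPS contains 1800 frames, so with 16-bit data the number of available levels is in the same range as the number of frames, even after gamma correction.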

Our second example of the same fade but with gamma correction now also appears smooth.

60 second fade 20% to 0% with gamma correction

And our third example of fading orange down from 100% to 0% shows a much more accurate color mix.

60 second fade of orange with gamma correction

The deviations from the perfectly mixed orange are less extreme, and will only appear in the final few seconds of the fade.

Deviation from intended orange color

Using 16-bit pixels, and driving them with native 16-bit DMX data from the control source, gives the best chance of achieving the smooth and accurate effects that are so desired in theatrical lighting designs.

There are many pixel protocols that support 16-bit color resolution; to find the protocol that meets your specific needs, see our Glossary of Supported Pixel Protocols.

16-Bit with PixLite® Mk3 Devices

PixLite® Mk3 pixel controllers support 16-bit pixel protocols and let you choose the input data resolution used to drive the pixels.

There are two options when working with 16-bit pixels:

Option 1

Use 8-bit DMX data from the source of lighting data, which the PixLite will then be able to map to a gamma-corrected 16-bit dimming curve. This is a great way to easily drive 16-bit fixtures, particularly if the source of lighting data doesn’t support native 16-bit data output. This will, however, still result in “steppiness” during long fades, as the incoming DMX data still has a low effective frame rate.
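Conceptually, Option 1 behaves like a lookup table from 8-bit input values to 16-bit output values. The sketch below is a generic illustration with an assumed gamma of 2.2; it is not the PixLite's actual curve or implementation.

```python
GAMMA = 2.2  # assumed value; the controller's real curve may differ


def build_lut_8_to_16(gamma=GAMMA):
    """Build a table mapping each 8-bit input (0..255) to a gamma-corrected 16-bit output."""
    return [round((value / 255) ** gamma * 65535) for value in range(256)]


lut = build_lut_8_to_16()
print(f"input 0   -> {lut[0]}")
print(f"input 51  -> {lut[51]}")
print(f"input 255 -> {lut[255]}")
print(f"distinct 16-bit outputs: {len(set(lut))}")
```

The outputs are spread over the 16-bit range, so very few levels are lost to gamma correction, but there can never be more than 256 distinct outputs because there are only 256 possible inputs. That is why long fades remain “steppy” in this mode.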

Option 2

The second option is to configure the PixLite’s “Input Data Resolution” to 16-bit. This will allow you to use 2 DMX/Art-Net/sACN channels together to represent intensity of each color, i.e. 6 channels are used for each RGB pixel or 8 channels are used for each RGBW pixel, giving you the ability to directly feed 16-bit data from your source of lighting control to the pixels. This is the optimal way to use PixLite in a theatrical lighting system, or anywhere you require smooth and accurate extended fades.
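As an illustration of the channel layout, the sketch below splits each 16-bit color value across two 8-bit channels. It assumes the high (coarse) byte is sent first, which is a common convention for 16-bit DMX parameters; check your controller and lighting source documentation for the actual byte order.

```python
def split_16bit(value):
    """Split a 16-bit value into (high_byte, low_byte)."""
    if not 0 <= value <= 0xFFFF:
        raise ValueError("value must be in the range 0..65535")
    return value >> 8, value & 0xFF


def pack_rgb16(red, green, blue):
    """Return the 6 channel values for one RGB pixel, assuming high byte first."""
    channels = []
    for value in (red, green, blue):
        channels.extend(split_16bit(value))
    return channels


# Orange at 100% red and roughly 50% green, as 16-bit values.
channels = pack_rgb16(65535, 32768, 0)
print(channels)  # 6 channels for one RGB pixel
```

An RGBW pixel would follow the same pattern with a fourth value, giving 8 channels per pixel.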

There are two caveats to operating the PixLite Mk3 with a 16-bit Input Data Resolution:

- Gamma correction must be done before the PixLite, at the lighting source, so you won’t be able to use PixLite’s gamma correction in this configuration. This is because full control of the 16-bit pixel data is given to the lighting source.

- The number of universes that the PixLite consumes is twice what it normally is, so you need to be mindful of the maximum number of universes that the controller can support.
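The universe budget for the second caveat can be estimated with a short calculation. This sketch assumes 512 channels per universe and that a pixel is never split across two universes; the function name and the example pixel count are illustrative only.

```python
import math

CHANNELS_PER_UNIVERSE = 512


def universes_needed(pixel_count, colors_per_pixel=3, bytes_per_color=1):
    """Estimate universes required, assuming pixels are not split across universes."""
    channels_per_pixel = colors_per_pixel * bytes_per_color
    pixels_per_universe = CHANNELS_PER_UNIVERSE // channels_per_pixel
    return math.ceil(pixel_count / pixels_per_universe)


pixels = 1020  # example installation size
print(f"8-bit RGB:  {universes_needed(pixels)} universes")
print(f"16-bit RGB: {universes_needed(pixels, bytes_per_color=2)} universes")
```

For this example the count doubles from 6 to 12 universes, which needs to fit within the maximum the controller supports.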

PixLite® Mk3 pixel controllers also include an ability to upscale 8-bit data to 9 bits, for pixels that are only 8-bit in resolution.

This is called Dithering, and whilst it will certainly help increase the visual quality of 8-bit pixels when applying gamma correction in the PixLite, if you’re after long fades and slow color changes for theatrical lighting, 16-bit pixels and 16-bit input data are the best choice.
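As a rough idea of how dithering can add one extra bit of resolution, the sketch below shows generic temporal dithering: a 9-bit target is displayed by alternating between two adjacent 8-bit values on successive frames. This is an illustration of the general technique only and is not claimed to be how the PixLite implements it.

```python
def dither_9bit_to_8bit(value9, frame_index):
    """Return the 8-bit value to output on this frame for a 9-bit target (0..511)."""
    base, remainder = divmod(value9, 2)
    # On odd frames, add the leftover half-step; clamp so 511 never exceeds 255.
    bump = remainder if frame_index % 2 else 0
    return min(255, base + bump)


target = 101  # 9-bit value, equivalent to 50.5 on an 8-bit scale
frames = [dither_9bit_to_8bit(target, i) for i in range(4)]
average = sum(frames) / len(frames)
print(frames, average)
```

Because the alternation happens faster than the eye can follow, the two values blend into an in-between level, but this adds only one extra bit rather than the eight extra bits of a true 16-bit chain.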